My worst fear had just come true. For about 2 hours straight, my distributed web crawler had been running and crawling flawlessly. But then, it suddenly stopped. The master node was completely unresponsive; it was not responding to commands and not logging anything. "What the hell just happened?", I said to myself. After all, my perfect, finely tuned Python code ran flawlessly on my development machine1 and I had successfully deployed it and tested it on my brand new CentOS virtual machines (VMs). So why on Earth would it suddenly fail?

Here follows the full story of my startling - but laborious - encounter with Python debuggers.

Attempt #1: top + gdb

To begin with, I thought about running the top command to see the state of the Python process:

top - 19:47:17 up 2 days, 2 min, 2 users, load average: 5.35, 3.39, 2.49

Tasks: 100 total, 3 running, 97 sleeping, 0 stopped, 0 zombie

%Cpu(s): 98.5 us, 0.4 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 1.1 st

KiB Mem : 500840 total, 6296 free, 231824 used, 262720 buff/cache

KiB Swap: 511996 total, 481356 free, 30640 used. 241472 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

25906 acme 20 0 1109824 201788 9772 R 98.7 40.3 45:53.56 python

1 root 20 0 41212 2228 1380 S 0.0 0.4 0:04.51 systemd

2 root 20 0 0 0 0 S 0.0 0.0 0:00.00 kthreadd

3 root 20 0 0 0 0 S 0.0 0.0 0:00.35 ksoftirqd/0

5 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 kworker/0:0H

The python process was taking slightly more than 200 MB of RAM and almost 100% of the CPU. The former was kind of expected, but the sudden spike in CPU usage was very suspicious. Clearly, the code was stuck somewhere, but it just didn't make sense that it was eating up all those precious CPU cycles. So I thought about attaching a debugger to the process. But to be honest, I was not that familiar with Python debuggers. Until then, I'd been using PyCharm's debugger on my laptop, which is really nice and powerful, but unfortunately my code had been deployed and was running on a VM with CentOS 7 as the host OS (with no GUI), so using PyCharm's debugger from a nice IDE was not an option2.

I had a few other alternatives:

- pdb, which I already knew. Unfortunately, I realized that you can't attach pdb to an already running process. Additionally, pdb is not good at debugging multithreaded programs3.

- Winpdb, which supports multithreaded programs and remote debugging, and has a very nice GUI. So it would make things convenient to debug in remote from my laptop. However, I found out that Winpdb cannot attach to an already running process, unless it has previously been started with its own server (rpdb2).

- gdb, apparently the ultimate debugger for Linux, which can attach to a running process. This looked like exactly what I needed.

So I was more than eager to give gdb a try4:

gdb python 25906

(gdb) thread apply all bt

Thread 19 (Thread 0x7f6291ea2700 (LWP 25908)):

#0 pthread_cond_timedwait@@GLIBC_2.3.2 ()

at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238

#1 0x00000000004ba86f in PyCOND_TIMEDWAIT (cond=0x925c60 <gil_cond>,

mut=0x925ca0 <gil_mutex>, us=5000) at Python/condvar.h:103

#2 0x00000000004bb18d in take_gil (tstate=0x19be840) at Python/ceval_gil.h:224

#3 0x00000000004bbd5f in PyEval_RestoreThread (tstate=0x19be840) at Python/ceval.c:443

#4 0x00007f629bc986a6 in floatsleep (secs=0)

at /home/acme/debug/Python-3.3.6/Modules/timemodule.c:1544

#5 0x00007f629bc9671c in time_sleep (self=0x7f629bf118d0, args=0x7f629c1f1300)

at /home/acme/debug/Python-3.3.6/Modules/timemodule.c:260

...

#26 0x000000000052b50b in t_bootstrap (boot_raw=0x7f6291fae5e0)

at ./Modules/_threadmodule.c:993

#27 0x00007f62a325bdc5 in start_thread (arg=0x7f6291ea2700) at pthread_create.c:308

#28 0x00007f62a287fced in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:113

...

Thread 17 (Thread 0x7f6290de0700 (LWP 25910)):

#0 0x00007f62a28802c3 in epoll_wait () at ../sysdeps/unix/syscall-template.S:81

#1 0x00007f6295fa0546 in zmq::epoll_t::loop (this=0x1ad7c70)

at bundled/zeromq/src/epoll.cpp:156

#2 0x00007f6295fa079c in zmq::epoll_t::worker_routine (arg_=0x1ad7c70)

at bundled/zeromq/src/epoll.cpp:189

#3 0x00007f6295fa54ca in thread_routine (arg_=0x1ad7cf0) at bundled/zeromq/src/thread.cpp:96

#4 0x00007f62a325bdc5 in start_thread (arg=0x7f6290de0700) at pthread_create.c:308

#5 0x00007f62a287fced in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:113

...

Thread 3 (Thread 0x7f626cff9700 (LWP 25924)):

#0 0x0000000000544b7b in sre_umatch (state=0x7f626cff26b0, pattern=0x1aea9b8)

at ./Modules/_sre.c:1065

#1 0x0000000000547cd6 in pattern_match (self=0x1aea900, args=0x7f62880d76f0, kw=0x0)

at ./Modules/_sre.c:1932

...

#37 0x000000000052b50b in t_bootstrap (boot_raw=0x7f6291fae940)

at ./Modules/_threadmodule.c:993

#38 0x00007f62a325bdc5 in start_thread (arg=0x7f626cff9700) at pthread_create.c:308

#39 0x00007f62a287fced in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:113

...

Thread 1 (Thread 0x7f62a3683740 (LWP 25906)):

#0 pthread_cond_timedwait@@GLIBC_2.3.2 ()

at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238

#1 0x00000000004ba86f in PyCOND_TIMEDWAIT (cond=0x925c60 <gil_cond>,

mut=0x925ca0 <gil_mutex>, us=5000) at Python/condvar.h:103

#2 0x00000000004bb18d in take_gil (tstate=0x1508660) at Python/ceval_gil.h:224

#3 0x00000000004bbd5f in PyEval_RestoreThread (tstate=0x1508660) at Python/ceval.c:443

#4 0x00007f629bc986a6 in floatsleep (secs=2)

at /home/acme/debug/Python-3.3.6/Modules/timemodule.c:1544

#5 0x00007f629bc9671c in time_sleep (self=0x7f629bf118d0, args=0x7f626582bfb0)

at /home/acme/debug/Python-3.3.6/Modules/timemodule.c:260

...

#24 0x00000000005262a7 in run_file (fp=0x151d990,

filename=0x7f62a3645040 L"crawler/crawler.py",

p_cf=0x7ffdd77788b0) at Modules/main.c:307

#25 0x0000000000526e71 in Py_Main (argc=4, argv=0x7f62a3643040) at Modules/main.c:744

#26 0x000000000041982a in main (argc=4, argv=0x7ffdd7778a98) at ./Modules/python.c:62

All I got was a bunch of cryptic, C-based stacktraces. But this all made sense after all: gdb was currently debugging Python's interpreter, which itself had been written in the C language, so its debug symbols were being used. From the above stacktraces then, I could only take a few wild guesses as to what the problem was:

- This looked like a classic case of deadlock, because the majority of the threads were in a wait state (e.g. threads 1 and 19).

- Some other threads (e.g. thread 17) were clearly related to my ZeroMQ queues, waiting indefinitely for incoming messages. This was kind of expected based on the deadlock hypothesis.

- One particular thread (3) apparently enjoyed being a black sheep. It was stuck in some kind of regular expression evaluation function. But at the time, I just didn't think much of it, as if it had been caused by some mysterious planet alignment. Read on and you'll have proof that I'd make a very bad astrologer.

The main problem I had here was that I just couldn't easily map those stacktraces to my Python code. It was not obvious. I needed some other way to attach to the process, and have Python-based stacktraces. Then I would have a better grasp of the problem.

Attempt #2: Winpdb

This is when I thought about giving Winpdb a try. Since this was a debugger specifically made for Python, I would finally be able to break directly into my code, and have a glimpse of the real problem. Well, at least in theory. This unfortunately implied that I would have to restart the Python process altogether and attach Winpdb directly from the start. But then, would I be able to reproduce the problem?

I definitely didn't feel like this was a one-hit wonder, so I proceeded with the necessary steps. As per Winpdb's documentation, and according to hints and bits found across the web, I was able to get a working debugging environment.

Installing the Winpdb client

These were the steps I performed on my Windows laptop:

- I downloaded the latest release of Python 2.7 and installed it5.

- I downloaded the latest release of wxPython and installed it6.

I downloaded and uncompressed the latest package of Winpdb.

cd c:\tmp curl -O https://storage.googleapis.com/google-code-archive-downloads/v2/code.google.com/winpdb/winpdb-1.4.8.zip 7z x winpdb-1.4.8.zipI installed and activated a Python 2.7 virtualenv:

cd winpdb-1.4.8 C:\Python27\Scripts\pip.exe install virtualenv C:\Python27\Scripts\virtualenv.exe env2.7 env2.7\scripts\activateAs per this discussion, I created symbolic links pointing to Python's 2.7 installation folder7:

cd env2.7\Lib\site-packages mklink /h wx.pth C:\Python27\Lib\site-packages\wx.pth mklink /h wxversion.py C:\Python27\Lib\site-packages\wxversion.py mklink /j wx-3.0-msw C:\Python27\Lib\site-packages\wx-3.0-msw\I tested out that wxPython was working as expected:

python >>> import wx >>> wx.version() '3.0.2.0 msw (classic)'Just to be sure, and per this discussion:

I downloaded the latest package of Python Cryptography Toolkit to encrypt communications for remote debugging8:

cd c:\tmp curl -O http://www.voidspace.org.uk/python/pycrypto-2.6.1/pycrypto-2.6.1-cp27-none-win_amd64.whlI installed the package:

pip install pycrypto-2.6.1-cp27-none-win_amd64.whl

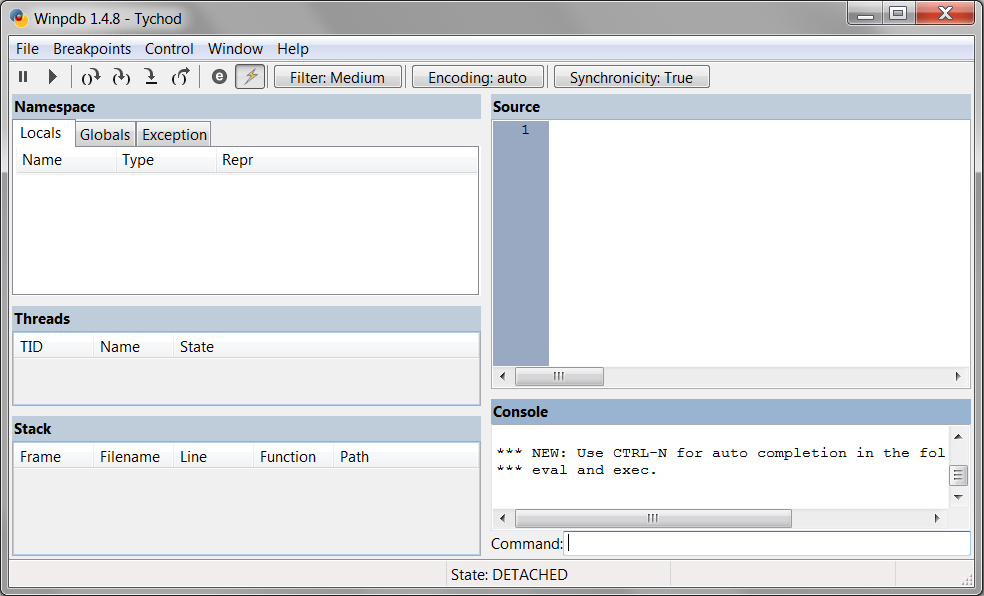

I ran Winpdb:

cd winpdb-1.4.8 python winpdb.pyAnd finally... some magic came from the skies:

Installing the Winpdb server (rpdb2):

These were the steps I performed on my CentOS VM:

I downloaded and uncompressed the latest package of Winpdb.

cd ~ wget https://storage.googleapis.com/google-code-archive-downloads/v2/code.google.com/winpdb/winpdb-1.4.8.zip unzip winpdb-1.4.8.zipI activated my Python 3.3 virtualenv:

source ~/crawler/env/bin/activateI installed the Python Cryptography Toolkit to encrypt communications for remote debugging (since I would connect remotely from my laptop... remember the awesome GUI?):

pip install pycryptoI copied winpdb-1.4.8/rpdb2.py to the folder containing the crawler's main source file:

cp winpdb-1.4.8/rpdb2.py ~/crawler/rpdb2.pyI edited the crawler's main source file to embed the debugger:

I edited crawler.py:

vim ~/crawler/crawler.pyI inserted the following block9:

import rpdb2 rpdb2.start_embedded_debugger("MY_EPIC_PASSWORD", fAllowUnencrypted=False, fAllowRemote=True, timeout=600)

I temporarily unblocked port 51000 in the firewall (note: this is the default port that rpdb2 listens on):

sudo iptables -A INPUT -s {MY_LAPTOP_IP_ADDRESS}/32 -p tcp -m tcp --dport 51000 -m state --state NEW,ESTABLISHED -j ACCEPT sudo iptables -A OUTPUT -d {MY_LAPTOP_IP_ADDRESS}/32 -p tcp -m tcp --sport 51000 -m state --state ESTABLISHED -j ACCEPTAs per this discussion, I patched rpdb2.py to make it work with Python 3.3:

I edited rpdb2.py:

vim ~/crawler/rpdb2.pyI commented out:

def __getsignal(signum): handler = g_signal_handlers.get(signum, g_signal_getsignal(signum)) return handlerAnd replaced it by:

def __getsignal(signum): sigval = None try: sigval = g_signal_getsignal(signum) except ValueError: return None return g_signal_handlers.get(signum, sigval)

Debugging my code with Winpdb

These were the steps I performed to debug the crawler:

On my CentOS VM, I ran the crawler:

cd ~/crawler source env/bin/activate python crawler.py --config data/crawler_config.jsonFrom my Windows laptop, I attached to the embedded debugger server running on my CentOS VM:

- From the Winpdb client, I chose File > Attach.

- In the Password dialog, I entered the password that I specified to the embedded debugger (e.g. MY_EPIC_PASSWORD).

- In the Attach dialog, I entered the IP address of my CentOS VM in the Host field. And then I clicked on Refresh.

- In the bottom list, I selected the script to debug (crawler.py) and hit OK.

- Once the client had attached, the debugger broke/paused on the first line of the main source file (crawler.py). I simply hit Go to resume execution.

Then, all I needed to do was wait approximately 2 hours until the deadlock would occur. All easy, right? Indeed, 2 hours later, the deadlock re-occurred. I was really excited to finally be able to break into my code and see what the problem was. But when I tried to hit the Break button in Winpdb, nothing happened. Winpdb had completely frozen. Sigh...

This is when I came upon this pearl in the documentation:

Asking Winpdb to break when the embedded Python interpreter is inactive will result in Winpdb becoming unresponsive.

That last statement was key: the Python interpreter was inactive. This is why I was unable to break into my code. But why was it inactive? Well, from the C-based stacktraces that I had previously collected with gdb (and that I thought were useless), I suddenly realized that the Python interpreter had given control to some low-level C libraries, and that it was stuck there. Unfortunately, this also meant that Winpdb, in my specific scenario, was completely useless. My inner astrologer had just taken another hit.

Attempt #3: top + gdb + python-debuginfo (hint: success!)

I thought that the best of all worlds would be to have a debugger capable of:

- Attaching to my Python process without any fuss.

- Displaying Python-based stacktraces for all threads.

- Displaying local variables and function parameters.

It turns out that such a debugger actually did exist and was in plain sight. The python-debuginfo extension was exactly what I needed. There was one small caveat however: it required a debug version of Python.

Compiling a debug version of Python

Update (21/09/2016): you don't necessarily need a debug version of Python to use python-debuginfo. You can just skip to the next section. Thanks to a fellow Reddit member for pointing this out.

Since I was using a specific version of Python (3.3.6) that was not directly shipped with CentOS, I had to re-compile it in debug mode. So basically:

I re-compiled my version of Python with the --with-pydebug switch10:

cd ~ mkdir debug tar -xvf Python-3.3.6.tar -C debug cd debug/Python-3.3.6 ./configure --with-pydebug --prefix=/home/acme/Python-3.3.6-dbg make make installI re-installed virtualenv (and thus pip)11:

cd ~ curl -O https://pypi.python.org/packages/8b/2c/c0d3e47709d0458816167002e1aa3d64d03bdeb2a9d57c5bd18448fd24cd/virtualenv-15.0.3.tar.gz tar xvfz virtualenv-15.0.3.tar.gz cd virtualenv-15.0.3 /home/acme/Python-3.3.6-dbg/bin/python3.3 setup.py install /home/acme/Python-3.3.6-dbg/bin/virtualenv ~/crawler/env-dbg cd ~/crawler source env-dbg/bin/activate pip install -r requirements.txt

Using the Python debugging extensions in gdb (python-debuginfo)

Now that I had a debug version of Python:

I ran the crawler once again and waited for ~ 2 hours (i.e. until the deadlock re-occurred):

python crawler.py --config data/crawler_config.json &I then retrieved its PID using top and attached to it using gdb:

gdb python {PID}I loaded the python-debuginfo extension from gdb12:

python import sys sys.path.insert(0, "/home/acme/debug/Python-3.3.6/Tools/gdb") import libpython endIn gdb, I listed all threads, which confirmed that they were all stuck in a wait state, except (unsurprisingly) thread #3.

(gdb) info threads Id Target Id Frame 19 Thread 0x7f6291ea2700 (LWP 25908) "python" pthread_cond_timedwait@@GLIBC_2.3.2 () at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238 18 Thread 0x7f62916a1700 (LWP 25909) "python" pthread_cond_timedwait@@GLIBC_2.3.2 () at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238 17 Thread 0x7f6290de0700 (LWP 25910) "python" 0x00007f62a28802c3 in epoll_wait () at ../sysdeps/unix/syscall-template.S:81 16 Thread 0x7f628bfff700 (LWP 25911) "python" 0x00007f62a28802c3 in epoll_wait () at ../sysdeps/unix/syscall-template.S:81 15 Thread 0x7f628b7fe700 (LWP 25912) "python" 0x00007f62a287569d in poll () at ../sysdeps/unix/syscall-template.S:81 14 Thread 0x7f628affd700 (LWP 25913) "python" pthread_cond_timedwait@@GLIBC_2.3.2 () at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238 13 Thread 0x7f628a7fc700 (LWP 25914) "python" 0x00007f62a28802c3 in epoll_wait () at ../sysdeps/unix/syscall-template.S:81 12 Thread 0x7f6289ffb700 (LWP 25915) "python" 0x00007f62a28802c3 in epoll_wait () at ../sysdeps/unix/syscall-template.S:81 11 Thread 0x7f62897fa700 (LWP 25916) "python" 0x00007f62a287569d in poll () at ../sysdeps/unix/syscall-template.S:81 10 Thread 0x7f6288ff9700 (LWP 25917) "python" pthread_cond_timedwait@@GLIBC_2.3.2 () at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238 9 Thread 0x7f626ffff700 (LWP 25918) "python" 0x00007f62a28802c3 in epoll_wait () at ../sysdeps/unix/syscall-template.S:81 8 Thread 0x7f626f7fe700 (LWP 25919) "python" 0x00007f62a28802c3 in epoll_wait () at ../sysdeps/unix/syscall-template.S:81 7 Thread 0x7f626effd700 (LWP 25920) "python" 0x00007f62a287569d in poll () at ../sysdeps/unix/syscall-template.S:81 6 Thread 0x7f626e7fc700 (LWP 25921) "python" pthread_cond_timedwait@@GLIBC_2.3.2 () at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238 5 Thread 0x7f626dffb700 (LWP 25922) "python" pthread_cond_timedwait@@GLIBC_2.3.2 () at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238 4 Thread 0x7f626d7fa700 (LWP 25923) "python" pthread_cond_timedwait@@GLIBC_2.3.2 () at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238 3 Thread 0x7f626cff9700 (LWP 25924) "python" 0x0000000000544b7b in sre_umatch ( state=0x7f626cff26b0, pattern=0x1aea9b8) at ./Modules/_sre.c:1065 2 Thread 0x7f6267fff700 (LWP 25925) "python" pthread_cond_timedwait@@GLIBC_2.3.2 () at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238 * 1 Thread 0x7f62a3683740 (LWP 25906) "python" pthread_cond_timedwait@@GLIBC_2.3.2 () at ../nptl/sysdeps/unix/sysv/linux/x86_64/pthread_cond_timedwait.S:238Then, I printed the stacktrace of each Python thread (seriously, that moment was magic, I could almost see the light!):

(gdb) thread apply all py-bt Thread 19 (Thread 0x7ffb44731700 (LWP 27049)): Traceback (most recent call first): Waiting for the GIL <built-in method sleep of module object at remote 0x7ffb4e7a08d0> File "/home/acme/crawler/env-dbg/lib/python3.3/site-packages/pymongo/periodic_executor.py", line 103, in _run time.sleep(self._min_interval) ... ... Thread 15 (Thread 0x7ffb4266d700 (LWP 27053)): Traceback (most recent call first): <built-in function zmq_poll> File "/home/acme/crawler/env-dbg/lib/python3.3/site-packages/zmq/sugar/poll.py", line 99, in poll return zmq_poll(self.sockets, timeout=timeout) File "/home/acme/crawler/env-dbg/lib/python3.3/site-packages/zmq/auth/thread.py", line 49, in run socks = dict(poller.poll()) ... ... Thread 14 (Thread 0x7ffb41e6c700 (LWP 27054)): Traceback (most recent call first): Waiting for the GIL <built-in method sleep of module object at remote 0x7ffb4e7a08d0> File "/home/acme/crawler/core/messaging/distributed_queues.py", line 69, in _push_worker time.sleep(self._WORKER_DELAY_IN_SECONDS) ... ... Thread 3 (Thread 0x7ffb1b7fe700 (LWP 27065)): Traceback (most recent call first): <built-in method match of _sre.SRE_Pattern object at remote 0x2c8d7f0> File "/home/acme/crawler/web/config/web_crawler_config.py", line 79, in is_match return self.pattern.match(url) File "/home/acme/crawler/filters/url_filter.py", line 114, in _is_filtered if url_pattern.is_match(normalized_url): File "/home/acme/crawler/env-dbg/lib/python3.3/functools.py", line 275, in wrapper result = user_function(*args, **kwds) File "/home/acme/crawler/filters/url_filter.py", line 91, in is_filtered return self._is_filtered(self._url_normalizer.normalize(url)) File "/home/acme/crawler/management/crawler_dispatcher.py", line 119, in _dispatch_worker not self._url_filter.is_filtered(url.hyperlink.normalized_url) and \ ... ... Thread 1 (Thread 0x7ffb55f12740 (LWP 27047)): Traceback (most recent call first): Waiting for the GIL <built-in method sleep of module object at remote 0x7ffb4e7a08d0> File "crawler/crawler.py", line 123, in _process_user time.sleep(self._THROTTLING_WAIT_TIME_IN_SECONDS) File "crawler/crawler.py", line 99, in _process_users self._process_user(user, last_id) File "crawler/crawler.py", line 79, in run self._process_users(last_id) File "crawler/crawler.py", line 156, in <module> crawler.run()

Pretty much every stacktrace was marked as 'Waiting for the GIL', except for thread #3 (regular expression) and those related to ZeroMQ queues. I had the feeling that some problematic regular expression was to blame, not ZeroMQ. But why would Python's regular expression (RE) engine fail so miserably?

Debugging a Python C module with gdb

I didn't know much about the internals of the Global Interpreter Lock (GIL), so I dug a little bit more and found this very nice post by Jesse Noller. Basically, C modules can voluntarily release the GIL, but some long-running operations may not do so. That last bit seemed to be the problem. Was a regular expression running forever, thus not releasing the GIL? I clearly needed to validate this.

(gdb) thread 3

[Switching to thread 3 (Thread 0x7ffb1b7fe700 (LWP 27065))]

#0 _PyUnicode_IsWhitespace (ch=1) at Objects/unicodetype_db.h:4310

4310 }

(gdb) py-bt

Traceback (most recent call first):

<built-in method match of _sre.SRE_Pattern object at remote 0x2c8d7f0>

File "/home/acme/crawler/web/config/web_crawler_config.py", line 79, in is_match

return self.pattern.match(url)

File "/home/acme/crawler/filters/url_filter.py", line 114, in _is_filtered

if url_pattern.is_match(normalized_url):

File "/home/acme/crawler/env-dbg/lib/python3.3/functools.py", line 275, in wrapper

result = user_function(*args, **kwds)

File "/home/acme/crawler/filters/url_filter.py", line 91, in is_filtered

return self._is_filtered(self._url_normalizer.normalize(url))

File "/home/acme/crawler/management/crawler_dispatcher.py", line 119, in _dispatch_worker

not self._url_filter.is_filtered(url.hyperlink.normalized_url) and \

File "/home/acme/Python-3.3.6-dbg/lib/python3.3/threading.py", line 858, in run

self._target(*self._args, **self._kwargs)

File "/home/acme/Python-3.3.6-dbg/lib/python3.3/threading.py", line 901, in _bootstrap_inner

self.run()

File "/home/acme/Python-3.3.6-dbg/lib/python3.3/threading.py", line 878, in _bootstrap

self._bootstrap_inner()

(gdb) py-up

#8 Frame 0x7ffb40661440, for file /home/acme/crawler/web/config/web_crawler_config.py, line 79, in is_match (self=<UrlPattern(type='regex', pattern=<_sre.SRE_Pattern at remote 0x2c8d7f0>) at remote 0x7ffb4065aed0>, url='http://www.acme.com/redirect.php?src=bm&t=ã¤ããã軽æ¸ãããªãèé

¸ãµããª&u=http://www.google.com')

return self.pattern.match(url)

(gdb) py-locals

self = <UrlPattern(type='regex', pattern=<_sre.SRE_Pattern at remote 0x2c8d7f0>) at remote 0x7ffb4065aed0>

Python Exception <type 'exceptions.UnicodeEncodeError'> 'ascii' codec can't encode characters in position 51-95: ordinal not in range(128):

Error occurred in Python command: 'ascii' codec can't encode characters in position 51-95: ordinal not in range(128)

So, apparently, self.pattern.match had raised a UnicodeEncodeError. I also saw that the test string was 'http://www.acme.com/redirect.php?src=bm&t=ã¤ããã軽æ¸ãããªãèé¸ãµããª&u=http://www.google.com'. But I just couldn't easily print the regular expression which had been executed upon it (i.e. self.pattern.pattern13):

(gdb) py-print self

local 'self' = <UrlPattern(type='regex', pattern=<_sre.SRE_Pattern at remote 0x2c8d7f0>) at remote 0x7ffb4065aed0>

(gdb) py-print self.pattern

'self.pattern' not found

This was not working because the py-print extension only takes a single variable name14. Great... but there was another option. All I actually needed was to look at the parameters passed to the pattern_match C function.

(gdb) bt

#0 _PyUnicode_IsWhitespace (ch=1) at Objects/unicodetype_db.h:4310

#1 0x000000000053de86 in sre_category (category=13, ch=133)

at ./Modules/_sre.c:211

#2 0x00000000005431e3 in sre_ucharset (set=0x2c8dac8, ch=133)

at ./Modules/_sre.c:416

#3 0x0000000000543429 in sre_ucount (state=0x7ffb1b7f76b0, pattern=0x2c8dabc,

maxcount=4294967295) at ./Modules/_sre.c:504

#4 0x00000000005446c2 in sre_umatch (state=0x7ffb1b7f76b0, pattern=0x2c8d8a8)

at ./Modules/_sre.c:1012

#5 0x0000000000547cd6 in pattern_match (self=0x2c8d7f0,

args=('http://www.acme.com/redirect.php?src=bm&t=ã¤ããã軽æ¸ãããªãèé

¸ãµããª&u=http://www.google.com',), kw=0x0) at ./Modules/_sre.c:1932

#6 0x00000000005fd8ad in PyCFunction_Call (

func=<built-in method match of _sre.SRE_Pattern object at remote 0x2c8d7f0>,

arg=('http://www.acme.com/redirect.php?src=bm&t=ã¤ããã軽æ¸ãããªãèé

¸ãµããª&u=http://www.google.com',), kw=0x0) at Objects/methodobject.c:84

#7 0x00000000004ce1a8 in call_function (pp_stack=0x7ffb1b7f7f90, oparg=1)

at Python/ceval.c:4066

#8 0x00000000004c803f in PyEval_EvalFrameEx (

f=Frame 0x7ffb40661440, for file /home/acme/crawler/web/config

(gdb) frame 5

#5 0x0000000000547cd6 in pattern_match (self=0x2c8d7f0,

args=('http://www.acme.com/redirect.php?src=bm&t=ã¤ããã軽æ¸ãããªãèé

¸ãµããª&u=http://www.google.com',), kw=0x0) at ./Modules/_sre.c:1932

1932 status = sre_umatch(&state, PatternObject_GetCode(self));

(gdb) info locals

state = {ptr = 0x7ffb284c6a46, beginning = 0x7ffb284c69f8,

start = 0x7ffb284c69f8, end = 0x7ffb284c6a6f,

string = 'http://www.acme.com/redirect.php?src=bm&t=ã¤ããã軽æ¸ãããªãèé

¸ãµããª&u=http://www.google.com', pos = 0, endpos = 119, logical_charsize = 4, charsize = 1,

lastindex = -1, lastmark = -1, mark = {0x0 <repeats 200 times>},

data_stack = 0x7ffb2d4c3630 "\377\377\377\377\377\377\377\377",

data_stack_size = 8744, data_stack_base = 4048, buffer = {buf = 0x0,

obj = 0x0, len = 0, itemsize = 0, readonly = 0, ndim = 0, format = 0x0,

shape = 0x0, strides = 0x0, suboffsets = 0x0, internal = 0x0},

repeat = 0x7ffb46ca3180, lower = 0x53dc26 <sre_lower_unicode>}

status = 0

string = 'http://www.acme.com/redirect.php?src=bm&t=ã¤ããã軽æ¸ãããªãèé

¸ãµããª&u=http://www.google.com'

string2 = 0x0

start = 0

end = 9223372036854775807

kwlist = {0x65a8d2 "string", 0x65a8d9 "pos", 0x65a8dd "endpos",

0x65a522 "pattern", 0x0}

To be sure, I looked at the source code of the pattern_match function. Its signature was:

static PyObject* pattern_match(PatternObject* self, PyObject* args, PyObject* kw)

So self was of type PatternObject. I then retrieved its value:

(gdb) info args

self = 0x2c8d7f0

args = ('http://www.acme.com/redirect.php?src=bm&t=ã¤ããã軽æ¸ãããªãèé

¸ãµããª&u=http://www.google.com',)

kw = 0x0

(gdb) p (PatternObject *) 0x2c8d7f0

$1 = (PatternObject *) 0x2c8d7f0

By looking at the source code of the PatternObject struct, I saw that it had a pattern member. So I retrieved its value:

(gdb) p $1->pattern

$3 = '^https?:\\/\\/(?:www\\.)?(?:\\w{2}\\-\\w{2}\\.)?(?:acme\\.com|ac\\.com)\\/(?:(?:\\S+\\/?)+)$'

Fantastic! This was the problematic regular expression that I'd been looking for the entire time! What a relief! But... wait a minute. It looked perfectly normal, and it had been working really well until then. True, but there was one subtle detail that I hadn't really paid attention to: the test string was split into two different lines because it contained the sequence '\r\n'. Did it really make any difference? This is what I validated by running this script on my laptop:

import re

def test_regex():

regex = re.compile("^https?:\\/\\/(?:www\\.)?(?:\\w{2}\\-\\w{2}\\.)?(?:acme\\.com|ac\\.com)\\/(?:(?:\\S+\\/?)+)$")

result = regex.match("http://www.acme.com/redirect.php?src=bm&t=ã¤ããã軽æ¸ãããªãèé\r\n¸ãµããª&u=http://www.google.com")

pass

if __name__ == "__main__":

test_regex()

And BAM! The Python interpreter completely froze upon calling regex.match. When I looked at the CPU usage of python.exe, it was very high, just like on my CentOS VM. "What a weird bug!" I thought. A bit of googling revealed that this was not a real bug per se, but an instance of what we call catastrophic backtracking. In my particular case, this happened because:

- There were nested quantifiers in my regex, i.e. (?:\S+\/?)+

- The test string contained the sequence \r\n, which caused the regex to run almost forever because of all the backtracking it had to do.

Unsurprisingly, other people had already encountered the exact same problem with Python's RE engine. Now, fortunately, the fix was quite simple. I simply had to remove the nested quantifiers from my regex. So I ended up with:

regex = re.compile("^https?:\\/\\/(?:www\\.)?(?:\\w{2}\\-\\w{2}\\.)?(?:acme\\.com|ac\\.com)\\/\\S+$")

And TADAM! Problem solved!

Final thoughts

As we've seen in this post, figuring out how to debug a multithreaded Python program can be quite hard for a novice Linux developer. But once you've played with a few debuggers, things look much simpler in perspective. In summary:

- Knowing your tools is important to be efficient at debugging. For instance, I could have saved a lot of time if I had known, from the start, that gdb was the right tool for the job. So:

- Winpdb is good for easy and fast debugging of Python code. But it's not good for debugging C modules. Moreover, you can't easily attach it to an already running process just like gdb.

- gdb is good for low-level debugging of Python code, thanks to the python-debuginfo extension. And it's perfect for debugging deadlock-like scenarios.

- You have a clear advantage if you know your development stack well. For example, if I had known how the GIL works, I wouldn't have dismissed the fact that one of the threads was stuck in a regular expression C function. This was not a negligible detail (and not an excuse for dubious references to astrology). Moreover, I didn't really need either python-debuginfo or Winpdb. I could have just peaked into the function to see what parameters had been passed to it. Then, from the values, I could have guessed from where the call had originated in my Python code.

- Don't be easily seduced by good-looking GUIs. They can be very helpful in some situations, but often, a console-based debugger is better.

- Don't ignore subtle but weird details while debugging. They might matter more than you think.

- In retrospective, my bug was not a real deadlock per se, because a single resource was locked (the GIL!).

I hope that this post was useful to you, and if so, please share your comments in the comment section below!

Here, you can probably recognize the overused "it works on my machine". ↩

At the time, I didn't know that Pycharm shipped with PyDev's debugger, which supports remote debugging. If you're interested, there's a very nice discussion explaining how PyDev - which is an Eclise-based Python IDE - got its debugger forked and then merged with PyCharm's debugger. ↩

As a matter of fact, pdb has no internal command to switch between threads. See this. ↩

Fortunately, gdb already ships with CentOS 7, so you can use it right away. ↩

Because wxPython is required for Winpdb's GUI to work and it only runs under Python 2.7. ↩

Be careful to choose a package matching the bittage of your Python installation. Initially, I downloaded an x64 package of wxPython, while I had previously installed Python 2.7 x86, and they're incompatible. ↩

Because wxPython's files are unfortunately NOT copied when creating a virtualenv. ↩

On Windows, you just can't run 'pip install pycrypto', because you need to install a C compiler first. But honestly, it's just easier to find an already built package! ↩

The 'timeout' parameter specifies the number of seconds that the embedded debugger waits until it resumes execution of the host program. That is, unless a Winpdb client has already attached to the debugger. ↩

As per this discussion, with a custom version of Python, you absolutely need to use the '--prefix' switch with configure. When you run make install, this tells the compiler where to deploy Python's binaries. ↩

Of course, re-installing Python means that you need to re-install all of your dependencies. Having a requirements.txt file is really handy. ↩

Apparently, those tools are NOT copied to the compiled Python debug version, so this is why we need to call sys.path.insert(). ↩

In Python, every sre.SREPattern instance has a 'pattern' attribute, which is a string containing the actual regular expression. ↩

I got this insight by looking at the actual code of libpython.py. You could just do the same: vim /home/acme/debug/Python-3.3.6/Tools/gdb/libpython.py. ↩